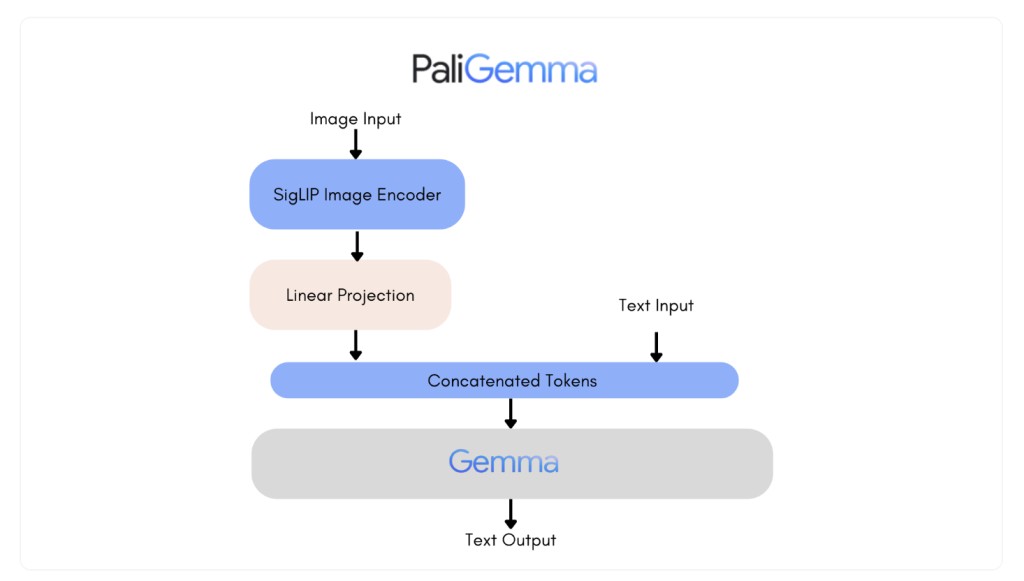

Google has released PaliGemma, a new family of vision language models that take an image and a text prompt as input and produce text as output. The PaliGemma (GitHub) architecture combines the SigLIP-So400m image encoder with the Gemma-2B text decoder. SigLIP is a state-of-the-art model that understands both images and text; like CLIP, it consists of an image encoder and a text encoder trained jointly. Gemma is a decoder-only model for text generation. PaliGemma connects SigLIP’s image encoder to Gemma through a linear adapter, which yields a capable vision language model. Like PaLI-3, the combined model is pre-trained on image-text data and can then be fine-tuned easily on downstream tasks such as captioning or referring segmentation.
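To make the role of the linear adapter more concrete, here is a minimal pure-Python sketch. It assumes the adapter is a single affine map that projects each image token to the width of the text embeddings, and that the projected image tokens are placed before the text embeddings in the decoder input. The function names and the toy sizes are hypothetical and chosen only for readability; this is not the actual PaliGemma code.

```python
import random


def linear_project(tokens, weight, bias):
    """Apply y = x @ W + b to every token vector (plain-Python matmul)."""
    columns = list(zip(*weight))  # columns of W, one per output dimension
    return [
        [sum(x * w for x, w in zip(tok, col)) + b for col, b in zip(columns, bias)]
        for tok in tokens
    ]


def build_decoder_input(image_tokens, text_embeddings, weight, bias):
    """Project image tokens to the text width, then prepend them to the text."""
    return linear_project(image_tokens, weight, bias) + text_embeddings


# Toy sizes for illustration only; the real models are far wider.
D_IMG, D_TXT, N_IMG, N_TXT = 4, 6, 3, 2
rng = random.Random(0)
image_tokens = [[rng.random() for _ in range(D_IMG)] for _ in range(N_IMG)]
text_embeddings = [[rng.random() for _ in range(D_TXT)] for _ in range(N_TXT)]
weight = [[rng.random() for _ in range(D_TXT)] for _ in range(D_IMG)]
bias = [0.0] * D_TXT

sequence = build_decoder_input(image_tokens, text_embeddings, weight, bias)
print(len(sequence), len(sequence[0]))  # 5 6
```

After projection, image and text tokens share one width, so the decoder can treat them as a single sequence.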

big_vision was used as the training codebase for PaliGemma. The same codebase has already been used to develop numerous other models, including CapPa, SigLIP, LiT, BiT, and the original ViT.

The PaliGemma release includes three distinct model types, each offering a unique set of capabilities:

PT checkpoints: Pretrained models that are highly adaptable and designed to be fine-tuned for a variety of downstream tasks.

Mix checkpoints: PT models fine-tuned on a mixture of tasks. They are suitable for general-purpose inference with free-text prompts and can only be used for research purposes.

FT checkpoints: A collection of fine-tuned models, each specialized on a different academic benchmark. They come in various resolutions and are meant for research only.

The models come in three resolutions (224×224, 448×448, and 896×896) and three precisions (bfloat16, float16, and float32). Each repository holds the checkpoints for one task and resolution, with three revisions, one per precision. The main branch of each repository contains the float32 checkpoints, while the bfloat16 and float16 revisions contain the corresponding precisions. Note that the models compatible with Hugging Face Transformers and those for the original JAX implementation live in separate repositories.
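The repository and revision layout described above can be sketched as a small helper. The repository naming pattern `google/paligemma-3b-{kind}-{resolution}` is an assumption that is not stated in this article, and `checkpoint_ref` and `load_checkpoint` are hypothetical helper names; verify the exact repository ids on the model hub before relying on them. The loading function uses the Transformers classes `PaliGemmaForConditionalGeneration` and `AutoProcessor` and is only defined here, not called.

```python
VALID_RESOLUTIONS = (224, 448, 896)
VALID_PRECISIONS = ("float32", "bfloat16", "float16")


def checkpoint_ref(kind, resolution, precision="float32"):
    """Return (repo_id, revision) for a checkpoint.

    The repo naming pattern is an assumption; float32 lives on the main
    branch, the other precisions on revisions named after the precision.
    """
    if resolution not in VALID_RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {resolution}")
    if precision not in VALID_PRECISIONS:
        raise ValueError(f"unsupported precision: {precision}")
    repo_id = f"google/paligemma-3b-{kind}-{resolution}"
    revision = "main" if precision == "float32" else precision
    return repo_id, revision


def load_checkpoint(kind, resolution, precision="bfloat16"):
    """Download and load a checkpoint (needs torch, transformers, hub access)."""
    import torch
    from transformers import AutoProcessor, PaliGemmaForConditionalGeneration

    repo_id, revision = checkpoint_ref(kind, resolution, precision)
    model = PaliGemmaForConditionalGeneration.from_pretrained(
        repo_id, revision=revision, torch_dtype=getattr(torch, precision)
    )
    processor = AutoProcessor.from_pretrained(repo_id)
    return model, processor


print(checkpoint_ref("mix", 224, "bfloat16"))
```

Not every combination of model type and resolution is necessarily published, so a lookup failure for a given pair may simply mean that checkpoint does not exist.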

The high-resolution models offer higher quality but require significantly more memory because their input sequences are much longer, which matters for users with limited resources. For most tasks, however, the quality gain is small, so the 224 versions are a suitable choice for the majority of uses.
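A quick calculation shows why the input sequences grow so fast with resolution. The sketch below assumes the image encoder splits the image into non-overlapping 14×14 patches and emits one token per patch; the patch size is an assumption that is not stated in this article.

```python
PATCH_SIZE = 14  # assumption: 14x14 patches, one image token per patch


def image_token_count(resolution, patch_size=PATCH_SIZE):
    """Number of image tokens for a square image of the given side length."""
    if resolution % patch_size:
        raise ValueError("resolution must be a multiple of the patch size")
    side = resolution // patch_size
    return side * side


for res in (224, 448, 896):
    print(res, image_token_count(res))
# 224 256
# 448 1024
# 896 4096
```

Under this assumption, each doubling of the resolution quadruples the number of image tokens, and attention cost grows faster still with sequence length.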

PaliGemma is a single-turn visual language model that performs best when tuned to a particular use case. It is not intended for conversational use. This means that while it excels in specific tasks, it may not be the best choice for all applications.

Users can specify the task the model will perform by conditioning it with task prefixes such as ‘detect’ or ‘segment’. The pretrained models were trained this way to give them a wide range of capabilities, such as question answering, captioning, and segmentation. However, they are not meant to be used directly; they are designed to be fine-tuned to specific tasks using a similar prompt structure. For interactive testing, the ‘mix’ family of models, fine-tuned on a mixture of tasks, can be used.
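As an illustration of the task-prefix idea, the following sketch builds prompt strings for a few tasks. Only the ‘detect’ and ‘segment’ prefixes are named in this article; the `caption {lang}` and `answer {lang} {question}` formats, and the helper name `build_prompt`, are assumptions to be checked against the model documentation.

```python
def build_prompt(task, argument="", lang="en"):
    """Build a task-prefixed prompt string (prompt formats are assumptions)."""
    task = task.strip().lower()
    argument = argument.strip()
    if task == "caption":
        return f"caption {lang}"
    if task == "answer":
        if not argument:
            raise ValueError("a question is required for the 'answer' task")
        return f"answer {lang} {argument}"
    if task in ("detect", "segment"):
        if not argument:
            raise ValueError(f"an entity is required for the '{task}' task")
        return f"{task} {argument}"
    raise ValueError(f"unknown task: {task}")


print(build_prompt("detect", "cat"))
print(build_prompt("answer", "What is on the table?"))
```

Keeping prompt construction in one place makes it easier to reuse the same prompt structure during fine-tuning and at inference time.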

Here are some examples of what PaliGemma can do: it can caption images, answer questions about images, detect entities in images, segment entities within images, and reason about and understand documents. These are just a few of its many capabilities.

When prompted, PaliGemma can caption images. With the mix checkpoints, users can experiment with different captioning prompts to see how the model responds.

PaliGemma can answer a question about an image that is passed in along with it.

PaliGemma can detect entities in an image when given the detect [entity] prompt. The bounding box coordinates are output as special location tokens, each carrying an integer that denotes a normalized coordinate.
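The sketch below shows one way such output could be turned into pixel coordinates. It rests on several assumptions that are not stated in this article: that location tokens look like `<loc0123>`, that each box is four tokens in the order y_min, x_min, y_max, x_max followed by a label, that multiple detections are separated by `;`, and that coordinates are normalized by 1024. The sample string is made up for illustration and is not real model output.

```python
import re

# Four location tokens followed by a label (token format is an assumption).
DETECTION = re.compile(r"((?:<loc\d{4}>){4})\s*([^<;]+)")


def parse_detections(text, width, height, bins=1024):
    """Convert location tokens to pixel boxes as (x_min, y_min, x_max, y_max)."""
    boxes = []
    for locs, label in DETECTION.findall(text):
        y_min, x_min, y_max, x_max = (
            int(value) / bins for value in re.findall(r"\d{4}", locs)
        )
        boxes.append(
            {
                "label": label.strip(),
                "box": (x_min * width, y_min * height, x_max * width, y_max * height),
            }
        )
    return boxes


# Made-up example string, not real model output.
sample = "<loc0256><loc0128><loc0768><loc0896> cat ; <loc0000><loc0512><loc0512><loc1023> dog"
for detection in parse_detections(sample, width=640, height=480):
    print(detection)
```

Scaling by the original image size rather than the model input size returns boxes in the coordinate system of the image the user supplied.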

When prompted with segment [entity], PaliGemma mix checkpoints can also segment entities within an image. This technique is known as referring expression segmentation because natural language descriptions are used to refer to the things of interest. The output is a sequence of location and segmentation tokens. As described above, the location tokens represent a bounding box. The segmentation tokens can be processed further to produce segmentation masks.
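A first step in handling this output is to separate the two kinds of tokens. The sketch below assumes each segmented entity is encoded as four `<locNNNN>` tokens followed by sixteen `<segNNN>` tokens and a label; these token formats and counts are assumptions that are not given in this article, and the sample string is synthetic. Turning the segmentation codes into an actual mask requires a separate decoding step that is not shown here.

```python
import re

# Four location tokens, sixteen segmentation tokens, then a label
# (token formats and counts are assumptions).
SEGMENT = re.compile(r"((?:<loc\d{4}>){4})((?:<seg\d{3}>){16})\s*([^<;]*)")


def parse_segments(text):
    """Split segmentation output into box coordinates, mask codes, and label."""
    results = []
    for locs, segs, label in SEGMENT.findall(text):
        results.append(
            {
                "label": label.strip(),
                "loc": [int(v) for v in re.findall(r"<loc(\d{4})>", locs)],
                "codes": [int(v) for v in re.findall(r"<seg(\d{3})>", segs)],
            }
        )
    return results


# Synthetic example string, not real model output.
sample = (
    "<loc0100><loc0200><loc0900><loc0800>"
    + "".join(f"<seg{i:03d}>" for i in range(16))
    + " cat"
)
print(parse_segments(sample))
```

The integer codes returned here are what a mask decoder would consume, together with the bounding box that places the mask within the image.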

PaliGemma mix checkpoints are very good at reasoning and understanding documents.

The post Google AI Introduces PaliGemma: A New Family of Vision Language Models appeared first on MarkTechPost.
