Molecular representation learning focuses on building computational models that encode molecules in a form useful for predicting their properties. It plays a significant role in drug discovery and materials science, where those predictions guide which compounds are worth investigating. The fundamental challenge is to capture a molecule's 3D structure efficiently, because that structure strongly influences its physical and chemical behavior and is therefore crucial for accurate property prediction.

Existing research in molecular representation learning has leveraged Denoising Diffusion Probabilistic Models (DDPMs), which generate molecular structures by gradually transforming random noise into structured data. Models such as GeoDiff and Torsional Diffusion have emphasized the importance of 3D molecular conformation for predicting molecular properties. Methods such as GeoMol go further by incorporating substructural details, namely the connectivity and arrangement of atoms within a molecule, which allows more precise modeling.
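To make the DDPM idea concrete, here is a minimal sketch of the standard forward (noising) process applied to atom coordinates, using the closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε. The linear schedule, its default values, and the function names are assumptions chosen for illustration; they are not taken from GeoDiff, Torsional Diffusion, or SubGDiff.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bars(betas):
    """Cumulative products of (1 - beta_t), i.e. alpha-bar_t for each step."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def q_sample(coords, t, abar, rng):
    """Draw x_t from q(x_t | x_0) in one shot.

    coords: list of (x, y, z) tuples, one per atom (the clean structure x_0).
    t:      0-indexed diffusion step.
    abar:   list returned by alpha_bars().
    """
    signal = math.sqrt(abar[t])
    noise = math.sqrt(1.0 - abar[t])
    return [
        tuple(signal * c + noise * rng.gauss(0.0, 1.0) for c in atom)
        for atom in coords
    ]

# Toy three-atom "molecule" with made-up coordinates.
toy_coords = [(0.0, 0.0, 0.0), (0.96, 0.0, 0.0), (-0.24, 0.93, 0.0)]
abar = alpha_bars(linear_beta_schedule(1000))
noisy = q_sample(toy_coords, 999, abar, random.Random(0))
```

At the final step almost none of the original signal remains, which is why the reverse (denoising) model can start from pure Gaussian noise.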

Researchers at the International Digital Economy Academy (IDEA) have introduced SubGDiff, a diffusion model that aims to improve molecular representation by incorporating subgraph information into the diffusion process. The key innovation is that the model also learns to predict which subgraph was diffused, which sets SubGDiff apart from traditional diffusion models and helps it retain the structural relationships and features that matter for accurate molecular property prediction.

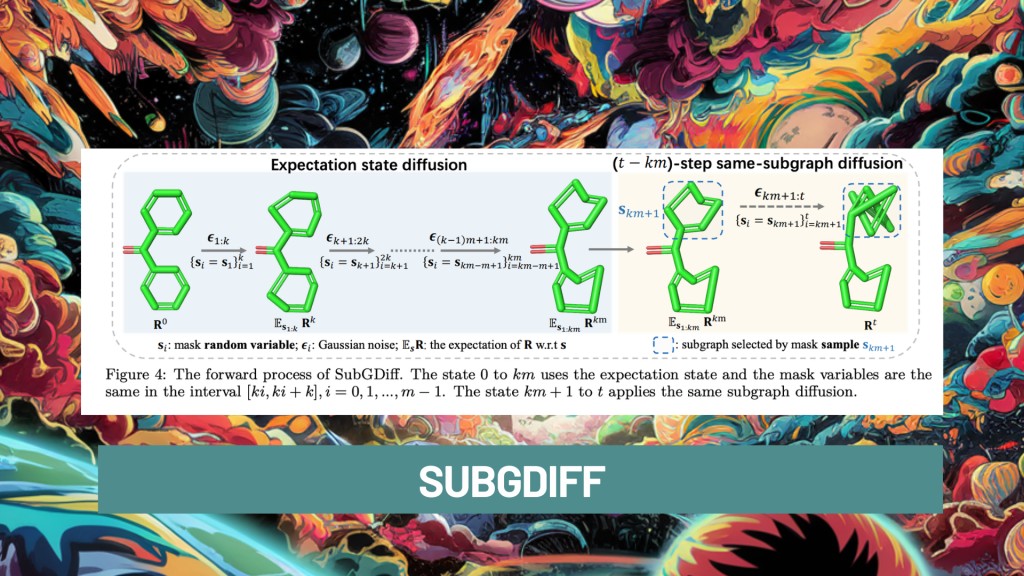

SubGDiff’s methodology centers on three techniques: subgraph prediction, expectation state diffusion, and k-step same-subgraph diffusion. For training and validation, the model uses the PCQM4Mv2 dataset, part of the larger PubChemQC project, which is known for its extensive collection of molecular structures. Together, these techniques make the model more sensitive to the intrinsic substructural features of molecules: the continuous diffusion process is adjusted to focus on relevant subgraphs, so critical molecular information is preserved throughout learning. According to the researchers, this is what enables SubGDiff's stronger performance on molecular property prediction tasks.
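The idea of restricting noise to a subgraph and reusing that subgraph for k consecutive steps can be illustrated with a small sketch. This is an illustration under stated assumptions, not the authors' implementation: the function names, the uniform choice among candidate subgraphs, and the per-atom 0/1 mask are all hypothetical, and expectation state diffusion is not covered here.

```python
import math
import random

def sample_mask_schedule(num_steps, subgraphs, num_atoms, k, rng):
    """Pick a subgraph every k steps and reuse its mask for those k steps.

    subgraphs: list of lists of atom indices (hypothetical candidate subgraphs).
    Returns one 0/1 mask over atoms per diffusion step.
    """
    masks, current = [], None
    for t in range(num_steps):
        if t % k == 0:  # start of a new k-step window: resample the subgraph
            chosen = set(rng.choice(subgraphs))
            current = [1 if i in chosen else 0 for i in range(num_atoms)]
        masks.append(current)
    return masks

def masked_forward_step(coords, mask, beta, rng):
    """One forward step that adds Gaussian noise only to masked atoms."""
    keep, scale = math.sqrt(1.0 - beta), math.sqrt(beta)
    out = []
    for atom, m in zip(coords, mask):
        if m:
            out.append(tuple(keep * c + scale * rng.gauss(0.0, 1.0) for c in atom))
        else:
            out.append(tuple(atom))  # atoms outside the subgraph stay fixed
    return out

# Toy four-atom example with two made-up candidate subgraphs.
rng = random.Random(0)
coords = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
masks = sample_mask_schedule(num_steps=6, subgraphs=[[0, 1], [2, 3]],
                             num_atoms=4, k=3, rng=rng)
for mask in masks:
    coords = masked_forward_step(coords, mask, beta=0.05, rng=rng)
```

In a setup like this, a denoising network could be trained with an auxiliary head that predicts the mask from the noisy structure, which is one plausible reading of the subgraph prediction objective described above.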

SubGDiff is reported to outperform standard models in molecular property prediction. In benchmark testing, it reduced mean absolute error by up to 20% compared to traditional diffusion models like GeoDiff, and it showed a 15% increase in accuracy on the PCQM4Mv2 dataset for predicting quantum mechanical properties. These results suggest that exploiting molecular substructures leads to more accurate predictions across a range of molecular representation tasks.
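For readers unfamiliar with the metric, the sketch below shows how mean absolute error and a relative reduction such as "20% lower MAE" are computed. The numbers in the example are made up for illustration and are not results from the paper.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / len(y_true)

def relative_reduction(baseline_mae, new_mae):
    """Fraction by which new_mae is lower than baseline_mae."""
    return (baseline_mae - new_mae) / baseline_mae

# Made-up targets and predictions, purely illustrative.
targets = [1.0, 2.0, 3.0]
baseline_preds = [1.5, 2.0, 2.0]
baseline_mae = mean_absolute_error(targets, baseline_preds)

# A hypothetical model with MAE 0.4 would be a 20% reduction over this baseline.
reduction = relative_reduction(baseline_mae, 0.4)
```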

To conclude, SubGDiff advances molecular representation learning by integrating subgraph information into the diffusion process. This approach yields a more detailed depiction of molecular structures and, in turn, better performance on property prediction tasks. The model's ability to incorporate essential substructural details points to its potential to improve outcomes in drug discovery and materials science, where precise molecular understanding is crucial.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Research Introduces SubGDiff: Utilizing Diffusion Model to Improve Molecular Representation Learning appeared first on MarkTechPost.
