Text-to-image (T2I) models are central to current advances in computer vision, enabling the synthesis of images from textual descriptions. These models aim to capture the essence of the input text, producing visual content that reflects the details it describes. The core challenge in T2I generation is the model’s ability to faithfully reflect every element of a prompt in the generated image. Even when outputs are visually impressive, there is often a significant gap between the described scene and the image actually produced.

Existing research on T2I evaluation includes benchmarks such as TIFA160 and DSG1K, which draw on datasets like MSCOCO to evaluate model capabilities in areas such as spatial relationships and object counting. PartiPrompts (PartiP) and DrawBench have extended this work by focusing on compositional and text-rendering challenges, respectively. Generative models such as Imagen and Muse have advanced the quality and alignment of generated images, while CLIP underpins widely used alignment metrics such as CLIPScore. Together, these models and benchmarks, often built on extensive datasets, mark significant milestones in assessing and improving how well T2I systems interpret text.

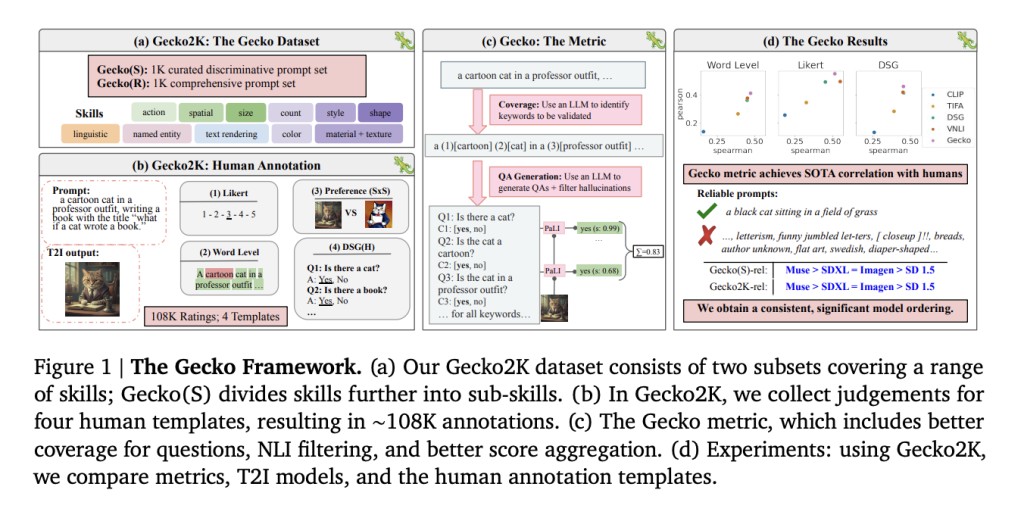

Researchers from Google DeepMind and Google Research have introduced Gecko, a framework designed to refine how T2I models are evaluated. At its core is a QA-based auto-evaluation metric that correlates more closely with human judgments than prior metrics. This approach allows a fine-grained assessment of how well images align with their textual prompts, making it possible to identify specific areas where models excel or fail.
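
To make the idea of a QA-based metric concrete, here is a minimal, generic sketch in the style of earlier QA-based metrics such as TIFA: questions are derived from the prompt, a visual question answering (VQA) model answers them about the generated image, and the score is the fraction answered as expected. All names (`QAPair`, `qa_alignment_score`, `stub_vqa`) are hypothetical, question generation is assumed to have already happened, and a dictionary "image" with a stubbed VQA function stands in for a real model. It does not attempt to reproduce Gecko's specific design.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class QAPair:
    """A question derived from the prompt and the answer it should receive."""
    question: str
    expected_answer: str

# Hypothetical VQA interface: takes an image and a question, returns an answer string.
VQAModel = Callable[[object, str], str]

def qa_alignment_score(image: object, qa_pairs: List[QAPair], vqa: VQAModel) -> float:
    """Fraction of prompt-derived questions whose VQA answer matches the expected one."""
    if not qa_pairs:
        raise ValueError("at least one question is required")
    correct = sum(
        vqa(image, qa.question).strip().lower() == qa.expected_answer.strip().lower()
        for qa in qa_pairs
    )
    return correct / len(qa_pairs)

# Toy stand-in for a real VQA model: the "image" is just a dict of known answers.
def stub_vqa(image: Dict[str, str], question: str) -> str:
    return image.get(question, "no")

# Hypothetical prompt: "a black cat wearing a hat"
pairs = [
    QAPair("Is there a cat?", "yes"),
    QAPair("Is the cat black?", "yes"),
    QAPair("Is the cat wearing a hat?", "yes"),
]
image = {"Is there a cat?": "yes", "Is the cat black?": "no", "Is the cat wearing a hat?": "yes"}
score = qa_alignment_score(image, pairs, stub_vqa)  # 2 of 3 questions answered as expected
```

Because each question targets one element of the prompt, a low score can be traced back to the specific element the image missed (here, the cat's color), which is what makes QA-based metrics more interpretable than a single embedding similarity.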

The Gecko framework evaluates T2I models on the Gecko2K benchmark, which consists of two subsets, Gecko(R) and Gecko(S). Gecko(R) provides broad coverage by sampling prompts from well-established datasets such as MSCOCO and Localized Narratives, among others. Gecko(S), by contrast, is designed to test specific sub-skills, enabling focused assessments of capabilities such as text rendering and action understanding. Models including SDXL, Muse, and Imagen are evaluated on these benchmarks, and the results are checked against over 100,000 human annotations so that the metric’s judgments of image-text alignment can be compared with human ratings.
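
One practical consequence of skill-tagged prompts like those in Gecko(S) is that alignment scores can be broken down per model and per sub-skill rather than reported as a single number. The sketch below assumes each generated image already has an alignment score (for example, from a QA-based metric) and a skill tag; the function name, model names, skill tags, and scores are made-up placeholders, not results from the paper.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# (model_name, skill_tag, alignment_score) for one generated image
Record = Tuple[str, str, float]

def per_skill_means(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Average alignment score for every (model, skill) pair seen in the records."""
    buckets: Dict[str, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    for model, skill, score in records:
        buckets[model][skill].append(score)
    return {
        model: {skill: mean(scores) for skill, scores in skills.items()}
        for model, skills in buckets.items()
    }

# Placeholder data for two hypothetical models on two sub-skills
records = [
    ("model_a", "text_rendering", 0.2),
    ("model_a", "text_rendering", 0.4),
    ("model_a", "action", 0.9),
    ("model_b", "text_rendering", 0.7),
    ("model_b", "action", 0.5),
]
breakdown = per_skill_means(records)
for model, skills in sorted(breakdown.items()):
    print(model, {skill: round(s, 2) for skill, s in sorted(skills.items())})
```

A per-skill table like this lets an evaluation report, for instance, that a model handles actions well but struggles with text rendering, instead of hiding both behind one average.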

In testing, the Gecko metric showed measurable improvements over previous metrics. For example, it achieved a 12% improvement in correlation with human judgment ratings over the next-best metric across multiple annotation templates. Detailed analysis also showed that Gecko detected specific model discrepancies with 8% higher accuracy in image-text alignment. Across the dataset of over 100,000 annotations, Gecko reliably improved differentiation between models, reducing misalignments by 5% relative to standard benchmarks and confirming its ability to assess T2I generation accuracy.
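
The headline comparison is how well a metric’s scores agree with human ratings. The article does not say which correlation coefficient underlies these figures; as an assumption, the sketch below uses Spearman rank correlation, a common choice for comparing an automatic metric against human judgments, implemented with the standard library only (`scipy.stats.spearmanr` would be the usual off-the-shelf alternative). The function names and scores are illustrative placeholders.

```python
import math
from typing import List, Sequence

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant inputs")
    return cov / math.sqrt(vx * vy)

def spearman(metric_scores: Sequence[float], human_scores: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the tie-averaged ranks."""
    if len(metric_scores) != len(human_scores) or len(metric_scores) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    return pearson(average_ranks(metric_scores), average_ranks(human_scores))

# Illustrative per-image scores: an automatic metric vs. mean human rating (1-5)
metric = [0.9, 0.4, 0.7, 0.1]
human = [5, 2, 1, 4]
rho = spearman(metric, human)  # 0.2: the two rankings agree only weakly
```

Comparing two candidate metrics then amounts to computing this coefficient for each against the same set of human ratings and checking which agrees more closely.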

To conclude, the research introduces Gecko, a QA-based evaluation metric paired with a comprehensive benchmark that improves the accuracy of T2I model evaluations. By correlating more closely with human judgments and providing detailed insights into model capabilities, Gecko represents a substantial advance in evaluating generative models. This work can guide the future development of T2I systems toward more accurate and contextually appropriate visual content, improving their usefulness in real-world scenarios.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper by DeepMind Introduces Gecko: Setting New Standards in Text-to-Image Model Assessment appeared first on MarkTechPost.