In the ever-evolving field of machine learning, developing models that predict and explain their reasoning is becoming increasingly crucial. As these models grow in complexity, they often become less transparent, resembling “black boxes” where the decision-making process is obscured. This opacity is problematic, particularly in sectors like healthcare and finance, where understanding the basis of decisions can be as important as understanding the decisions themselves.

One fundamental issue with complex models is their lack of transparency, which complicates their adoption in environments where accountability is key. Traditionally, methods to increase model transparency have included various feature attribution techniques that explain predictions by assessing the importance of input variables. However, these methods often suffer from inconsistencies; for example, results may vary significantly across different runs of the same model on identical data.

Researchers have developed gradient-based attribution methods to tackle these inconsistencies, but they, too, have limitations. These methods can provide divergent explanations for the same input under different conditions, undermining their reliability and the trust users place in the models they aim to elucidate.

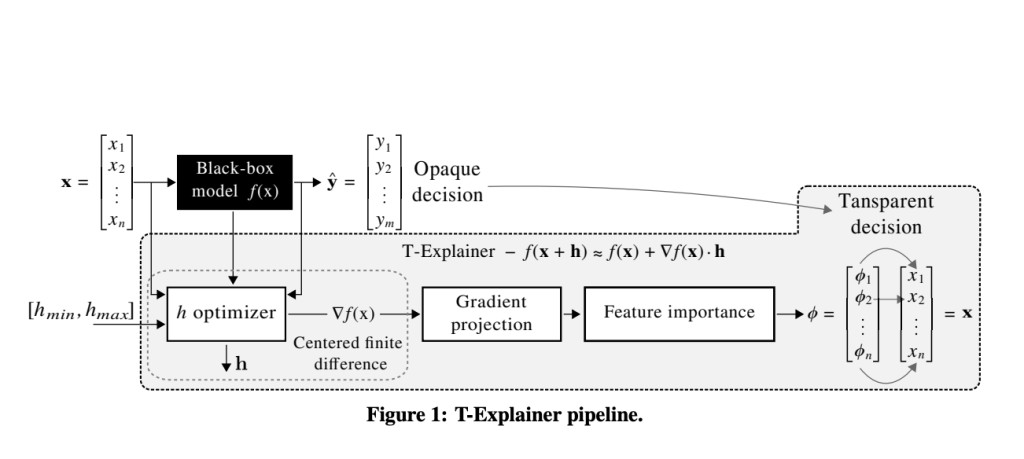

Researchers from the University of São Paulo (ICMC-USP), New York University, and Capital One introduced a new approach known as the T-Explainer. This framework focuses on local additive explanations based on the robust mathematical principles of Taylor expansions. It aims to maintain high accuracy and consistency in its explanations. Unlike other methods that might fluctuate in their explanatory output, the T-Explainer operates through a deterministic process that ensures stability and repeatability in its results.
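To make the idea of a Taylor-based local additive explanation concrete, the following is a minimal sketch and not the authors' implementation. It assumes a first-order expansion, f(x + d) ≈ f(x) + ∇f(x)·d, and estimates the gradient with central finite differences, so each feature's attribution is its partial derivative at the point being explained. The names `taylor_attributions` and `toy_model`, and the step size `h`, are hypothetical choices made for illustration.

```python
import numpy as np


def taylor_attributions(f, x, h=1e-4):
    """Approximate the gradient of f at x with central finite differences.

    Under a first-order Taylor expansion, grad[i] is the local additive
    contribution of feature i per unit change in that feature.
    """
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        # No random sampling: the same f and x always give the same result.
        grad[i] = (f(x + step) - f(x - step)) / (2.0 * h)
    return grad


def toy_model(x):
    # Hypothetical stand-in for a trained black-box model (a small logistic function).
    z = 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[0] * x[1]
    return 1.0 / (1.0 + np.exp(-z))


x = np.array([0.3, -0.2])
attr_a = taylor_attributions(toy_model, x)
attr_b = taylor_attributions(toy_model, x)

# Local additive approximation of the model near x.
delta = np.array([0.01, -0.01])
approx = toy_model(x) + attr_a @ delta
exact = toy_model(x + delta)

print("attributions:", attr_a)
print("identical across runs:", np.array_equal(attr_a, attr_b))
print("approximation error:", abs(approx - exact))
```

Because nothing in this procedure is sampled, repeated calls on the same input return identical attributions, which is the kind of repeatability the article attributes to a deterministic approach.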

The T-Explainer not only pinpoints which features of a model influence predictions but does so with a precision that allows for deeper insight into the decision-making process. In a series of benchmark tests, the T-Explainer outperformed established methods like SHAP and LIME on stability and reliability. In comparative evaluations, it maintained explanation accuracy across multiple assessments and scored better on stability metrics such as Relative Input Stability (RIS) and Relative Output Stability (ROS).
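The article does not define these metrics. As a rough sketch, assuming the common formulation in the explanation-stability literature, RIS compares the relative change in an explanation with the relative change in the input that caused it, and lower values indicate a more stable explainer. The function names, the norm order `p`, and the `eps` guard below are assumptions for illustration and may differ from the paper's exact setup. ROS is typically defined in a similar way, with the change in model output in the denominator.

```python
import numpy as np


def _relative_change(a, b, eps):
    # Element-wise (a - b) / a, guarding against division by near-zero entries.
    denom = np.where(np.abs(a) < eps, eps, a)
    return (a - b) / denom


def relative_input_stability(x, x_pert, e_x, e_pert, p=2, eps=1e-8):
    """RIS for one (input, perturbed input) pair; lower means more stable."""
    x, x_pert, e_x, e_pert = (
        np.asarray(a, dtype=float) for a in (x, x_pert, e_x, e_pert)
    )
    explanation_change = np.linalg.norm(_relative_change(e_x, e_pert, eps), ord=p)
    input_change = np.linalg.norm(_relative_change(x, x_pert, eps), ord=p)
    return explanation_change / max(input_change, eps)


# Hypothetical numbers: a 1% input change that causes a 1% explanation change.
x = [1.0, 2.0]
x_pert = [1.01, 2.02]
e_x = [0.5, -0.25]
e_pert = [0.505, -0.2525]

ris = relative_input_stability(x, x_pert, e_x, e_pert)
ris_unchanged = relative_input_stability(x, x_pert, e_x, e_x)
print("RIS:", ris)
print("RIS when the explanation does not move:", ris_unchanged)
```

In practice the metric is usually reported as the maximum of this ratio over many small perturbations of the same input, so a single pair like the one above is only one term of that maximum.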

The T-Explainer integrates seamlessly with existing frameworks, enhancing its utility. It has been applied effectively across various model types, showcasing flexibility that is not always present in other explanatory frameworks. Its ability to provide consistent and understandable explanations enhances the trust in AI systems and facilitates a more informed decision-making process, making it invaluable in critical applications.

In conclusion, the T-Explainer emerges as a powerful solution to the pervasive opacity issue in machine learning models. By leveraging Taylor expansions, the framework offers deterministic and stable explanations that surpass existing methods like SHAP and LIME in consistency and reliability. The results from various benchmark tests support T-Explainer’s stronger performance on these measures, enhancing the transparency and trustworthiness of AI applications. As such, the T-Explainer addresses the critical need for clarity in AI decision-making processes and paves the way for more accountable and interpretable AI systems.


The post This Machine Learning Paper from ICMC-USP, NYU, and Capital-One Introduces T-Explainer: A Novel AI Framework for Consistent and Reliable Machine Learning Model Explanations appeared first on MarkTechPost.
