Artificial Intelligence (AI) is a rapidly expanding field, with new applications emerging daily. However, ensuring the accuracy and dependability of AI models remains a difficult task. Conventional AI assessment techniques are frequently cumbersome and require extensive manual setup, which impedes ongoing development and disrupts developers’ workflows. There is no standard framework, tool, or set of rules for testing models and collaborating on them. Engineers are mostly left to sift manually through failing rows before deployment in order to understand and improve their models.

Openlayer’s Innovative Solution

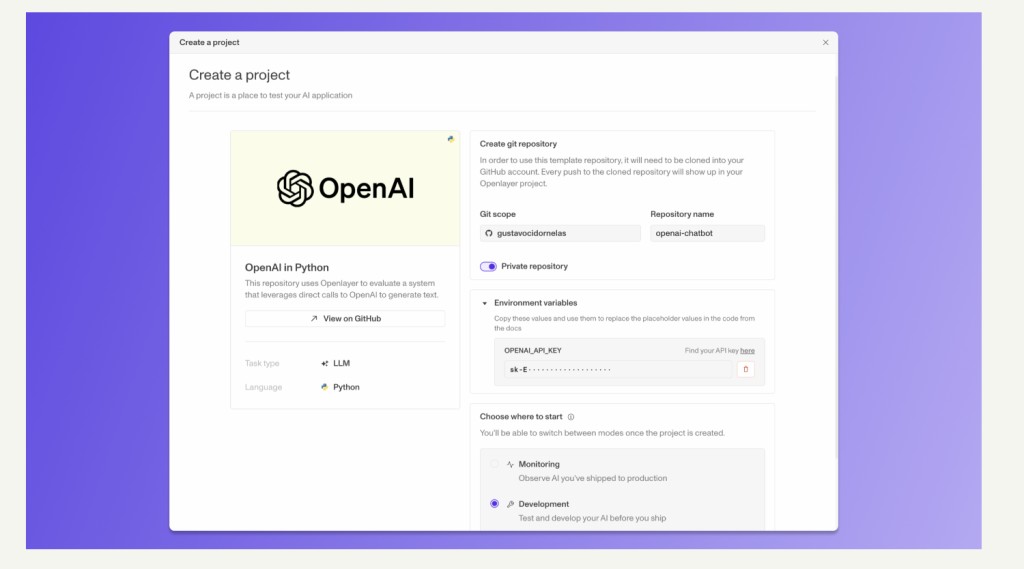

Meet Openlayer, an evaluation tool that fits into your development and production pipelines to help you ship high-quality models with confidence. Openlayer seeks to transform AI assessment by providing a straightforward, automated methodology that can be easily incorporated into current development procedures.

Openlayer’s approach is simple. Developers define a series of “must-pass” tests for their AI system and connect their GitHub repository to Openlayer. They can write their own tests or choose from a library of more than 100 pre-built ones. Once the integration is in place, each code commit automatically triggers these tests on Openlayer’s platform, so evaluation runs continuously without extra work from the developer.
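To make the idea of a “must-pass” test concrete, here is a minimal, generic sketch in Python. It is not Openlayer’s API: the names `MustPassTest`, `run_gate`, and `gate_passes`, the two metrics, and the thresholds are all hypothetical and chosen only for illustration. The sketch shows the general pattern a commit-triggered check could follow: compute a few metrics on a labeled validation set and fail the gate if any metric falls below its threshold.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence


@dataclass
class MustPassTest:
    """A named metric plus the minimum value it must reach (hypothetical type)."""
    name: str
    metric: Callable[[Sequence[int], Sequence[int]], float]
    threshold: float


def accuracy(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """Fraction of predictions that match their labels."""
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def positive_recall(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """Fraction of positive (label 1) rows that the model also predicts as 1."""
    positives = [p for y, p in zip(labels, predictions) if y == 1]
    if not positives:
        return 1.0  # no positive rows, so nothing can be missed
    return sum(1 for p in positives if p == 1) / len(positives)


def run_gate(
    tests: Sequence[MustPassTest],
    labels: Sequence[int],
    predictions: Sequence[int],
) -> Dict[str, bool]:
    """Evaluate every test and report pass/fail per test name."""
    return {
        t.name: t.metric(labels, predictions) >= t.threshold
        for t in tests
    }


def gate_passes(results: Dict[str, bool]) -> bool:
    """The gate passes only if every must-pass test passed."""
    return all(results.values())


# Illustrative thresholds; real values depend on the model and the use case.
TESTS = [
    MustPassTest("accuracy >= 0.70", accuracy, 0.70),
    MustPassTest("positive recall >= 0.80", positive_recall, 0.80),
]
```

In a continuous integration job, a script could call `run_gate` on the latest model’s predictions and exit with a non-zero status when `gate_passes` returns `False`, which would block the commit from being merged or deployed.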

Key Features and Benefits of Openlayer

Openlayer makes the assessment process more efficient, enabling developers to find and fix bugs early and often. This improves the overall quality and dependability of AI models and frees up valuable development time for more creative work.

Developers can track, test, and deploy models with Openlayer, which works with their existing workflows.

Openlayer provides features such as automated testing and real-time monitoring.

Through the platform, users can collaborate and monitor their progress.

Openlayer offers on-premise hosting and is SOC 2 compliant.

Funding Round

Openlayer recently announced that it has raised $4.8 million in a seed round led by Quiet Capital, with additional funding from Ground Up VC, Y Combinator, Picus Capital, Hack VC, Liquid2 Ventures, Soma Capital, and Mantis VC.

Key Takeaways

Conventional AI evaluation methods are often cumbersome and disrupt developers’ workflows because they require too much manual setup and customization.

Openlayer is an evaluation tool that helps developers improve the quality of their Machine Learning models.

Developers can use it to track, test, and deploy models.

Openlayer includes capabilities such as real-time monitoring and automated testing, and it integrates with existing workflows.

Through the platform, developers can collaborate and monitor their progress.

In Conclusion

Openlayer simplifies the frequently difficult process of evaluating AI. By providing automated testing, real-time monitoring, and integration with existing workflows, it gives developers the tools to build more dependable and robust Machine Learning models. With its emphasis on developer experience and its attention to security, Openlayer presents itself as a useful tool for advancing AI development.

The post Meet Openlayer: An AI Evaluation Tool that Fits into Development and Production Pipelines to Help Ship High-Quality Models with Confidence appeared first on MarkTechPost.
