Building data sources on Amazon Simple Storage Service (Amazon S3) can provide substantial benefits for analysis pipelines because it lets you access multiple large data sources, optimize the curation of new ingestion pipelines, build artificial intelligence (AI) and machine learning (ML) models, and provide customized experiences for customers and consumers alike.

In this post, we show you custom configurations at the AWS Database Migration Service (AWS DMS) and Amazon S3 level to optimize loads and reduce target latency. Every replication is unique, and it’s critical to test and analyze these changes in your own environment before making any changes to production systems. AWS DMS tasks share replication instance resources such as memory and CPU, so each extra connection attribute (ECA), task-level parameter, or task setting can impact not just the current operation but also other operations on the same replication instance.

How to troubleshoot latency

In any logical replication process, the major steps include collecting data from your source, processing changes at the replication level (in the case of AWS DMS, this happens on the replication instance), and preparing and sending data to the desired target. Because AWS DMS replicates data asynchronously, issues such as a sudden increase in changes, long-running queries, or resource constraints can create gaps between the time a change is committed on your source and the time it is available on your target. These gaps are what we call latency.

The AWS DMS documentation covers observability, general source and target latency issues, and how to monitor and address common causes of latency. In our case, we perform the following troubleshooting steps:

Identify whether the delay is at the source capture or at the Amazon S3 (target) end. You can do this by checking the values of CDCLatencySource and CDCLatencyTarget, respectively.

Confirm that there are enough resources on your replication instance. Check whether Amazon CloudWatch metrics such as CPUUtilization, FreeableMemory and SwapUsage, ReadIOPS and WriteIOPS, and ReadLatency and WriteLatency are experiencing spikes or consistently high values. Memory is especially critical for AWS DMS to adequately replicate sudden increases or large volumes of CDC operations.

Review Amazon S3 general settings and performance, such as using S3 buckets that are as geographically close as possible to or in the same AWS Region as your AWS DMS replication. Although S3 buckets can support thousands of requests per second per prefix, make sure to follow all the guidelines for Amazon S3 optimization.
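The first troubleshooting step can be sketched in Python. This is a minimal illustration, not an official tool: the helper names, the 15-minute default threshold, and the decision rule are assumptions made for this example. The dimension names used for the `AWS/DMS` namespace should be checked against what CloudWatch shows for your own task.

```python
from datetime import datetime, timedelta, timezone


def latency_metric_query(metric_name, instance_id, task_id, minutes=60):
    """Build keyword arguments for CloudWatch get_metric_statistics.

    The dimension names below are an assumption to verify in your own
    CloudWatch console; instance_id and task_id are the values listed
    there for your replication instance and task.
    """
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/DMS",
        "MetricName": metric_name,  # e.g. CDCLatencySource or CDCLatencyTarget
        "Dimensions": [
            {"Name": "ReplicationInstanceIdentifier", "Value": instance_id},
            {"Name": "ReplicationTaskIdentifier", "Value": task_id},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,
        "Statistics": ["Average"],
    }


def locate_bottleneck(source_latency_s, target_latency_s, threshold_s=900):
    """Rough heuristic: decide where latency builds up.

    Returns "ok" when both values are under the threshold, "source" when
    the source capture is already late, and "target" otherwise.
    """
    if source_latency_s < threshold_s and target_latency_s < threshold_s:
        return "ok"
    if source_latency_s >= threshold_s:
        return "source"
    return "target"


# Source latency near zero, target latency above 15 minutes.
print(locate_bottleneck(0, 1200))
```

With boto3 installed and credentials configured, the dictionary returned by `latency_metric_query` could be passed to `boto3.client("cloudwatch").get_metric_statistics(**query)`.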

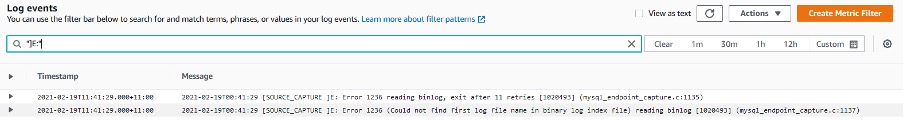

You can also check the DMS task logs from your process in order to make sure there are no errors or warning messages such as client disconnections, replication issues or messages related to latency.

To check your task logs in CloudWatch, you must first enable them. The AWS DMS documentation provides more information on how to complete this step.

After you complete the previous step, or if you have already done it, the logs are available in the AWS DMS console: under “Database Migration Tasks”, select your task, choose the “Overview details” tab, and then under “Task Logs”, choose “View CloudWatch Logs”.

From the list of logs, you can search with patterns such as a table name, or find error and warning messages using the expressions “]E:” or “]W:”.
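If you export the task log lines, the same search patterns can be applied offline. The following is a small sketch under that assumption. The function name is hypothetical, and the sample lines are made up for illustration rather than copied from real AWS DMS output.

```python
import re

# Severity marker that follows the component name, for example "]E:" or "]W:".
SEVERITY = re.compile(r"\](?P<level>[EW]):")


def filter_log_lines(lines, table_name=None):
    """Return (level, line) pairs for error and warning lines.

    When table_name is given, keep only lines that mention it.
    """
    hits = []
    for line in lines:
        match = SEVERITY.search(line)
        if match is None:
            continue  # informational or unrelated line
        if table_name is not None and table_name not in line:
            continue
        hits.append((match.group("level"), line))
    return hits


# Made-up sample lines, for illustration only.
sample = [
    "[TASK_MANAGER ]I: hypothetical informational message",
    "[TARGET_APPLY ]W: hypothetical warning for table ORDERS",
    "[SOURCE_CAPTURE ]E: hypothetical error message",
]

for level, line in filter_log_lines(sample):
    print(level, line)
```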

Here is an example of the messages you might see; we will dive into a common case of CDC stopped and which warnings are generated later in this article.

For this post, we assume that you have addressed these considerations. We consider a scenario where only target latency is present, the Amazon S3 settings have been optimized, and the replication instance has enough resources to handle the workload.

Use case overview

In this scenario, we are facing contention issues during replication to Amazon S3. Our initial observations are as follows:

We have multiple Oracle and MySQL source databases on premises and on Amazon Relational Database Service (Amazon RDS), and some of these are showing a heavy transactional profile.

Binary log and archive generation fluctuates from 20 GB/hr up to 100 GB/hr at peak times.

Our initial investigation showed no source latency, and we had provided enough resources at the replication level: CDCLatencySource was at or near zero, while CDCLatencyTarget fluctuated above the accepted threshold, which in our scenario is 15 minutes.

The AWS DMS task and S3 endpoints were configured with the default settings of the target. During peak times, the CDCLatencyTarget metric would reach thousands of seconds and would keep steadily increasing, which resulted in the AWS DMS task being forced to pause reading changes from the source.

These pauses were caused by the influx of changes, coupled with the delay in processing and the AWS DMS settings, which allow transactions to stay in memory only for a finite amount of time (so as not to exhaust the resource and impact other processes). These issues can be identified by intermittent messages in the AWS DMS task logs, such as: “[SORTER ]I: Reading from source is paused. Total storage used by swap files exceeded the limit 1048576000 bytes (sorter_transaction.c:110).”

Source latency also increases because AWS DMS can’t apply the current changes and it has to halt the CDC capture.

The final result is a constant loop of stuck changes, which impacts the overall latency and customer experience with the replication as a whole, because, in this scenario, end-users can’t have the data in the expected window of 15 minutes.
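The pause message quoted in this section includes the swap file limit in bytes. As a small sketch, a regular expression can pull that number out of a log line and convert it to MiB. The function name is hypothetical, and the pattern assumes the message keeps the exact wording shown in this post.

```python
import re

# Pattern built from the message text quoted in this post.
PAUSE_MESSAGE = re.compile(
    r"Reading from source is paused\. "
    r"Total storage used by swap files exceeded the limit (\d+) bytes"
)


def swap_limit_bytes(line):
    """Return the swap file limit from a pause message, or None if absent."""
    match = PAUSE_MESSAGE.search(line)
    return int(match.group(1)) if match else None


line = (
    "[SORTER ]I: Reading from source is paused. Total storage used by swap "
    "files exceeded the limit 1048576000 bytes (sorter_transaction.c:110)."
)
limit = swap_limit_bytes(line)
print(limit, "bytes =", limit / 1024**2, "MiB")
```

The limit in this message works out to exactly 1,000 MiB.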

To illustrate this scenario, the following screenshot shows some of the key metrics that you can check for your task directly in the AWS DMS console:

You can also check resource metrics for the replication instance, such as memory, IOPS, and network bandwidth, on the CloudWatch metrics tab:

How to address target performance issues

To address these issues, you can perform the following steps. As noted earlier, these values are examples based on this specific scenario and might not work the same way in your replication, because every process is unique. It is critical to test these changes in a QA or DEV environment, perform a thorough analysis, and only then apply the same changes to any production workloads.

Stop any current AWS DMS tasks related to the same S3 target.

At the S3 target DMS endpoint level, change the following settings to control the frequency of writes:

cdcMaxBatchInterval – The maximum interval length condition, defined in seconds, to output a file to Amazon S3. Change the default value from 60 seconds to 20 seconds.

cdcMinFileSize – The minimum file size condition as defined in kilobytes to output a file to Amazon S3. Change the default value of 32,000 KB to 16,000 KB.

At the AWS DMS task level, adjust the following settings for the current CDC task:

MinTransactionSize – The minimum number of changes to include in each transaction. Change the default from 1,000 to 3,000.

CommitTimeout – The maximum time in seconds for AWS DMS to collect transactions in batches before declaring a timeout. Change the default value of 1 second to up to 5 seconds.

Resume the tasks to apply the new changes.
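The endpoint and task changes in these steps can be expressed as request payloads. The sketch below only builds the payloads, using the values from this scenario. The function name and the ARNs are placeholders. It assumes the boto3 DMS client methods `modify_endpoint` and `modify_replication_task`, that the tasks are already stopped, and that a partial task settings document is merged with the settings you don't list; verify that last point in a test environment first.

```python
import json

# Endpoint-level values from this post's scenario (seconds and KB).
S3_ENDPOINT_SETTINGS = {
    "CdcMaxBatchInterval": 20,
    "CdcMinFileSize": 16000,
}

# Task-level values from this post's scenario (changes and seconds).
TASK_SETTINGS = {
    "ChangeProcessingTuning": {
        "MinTransactionSize": 3000,
        "CommitTimeout": 5,
    }
}


def build_requests(endpoint_arn, task_arn):
    """Return keyword arguments for the endpoint and task modification calls."""
    endpoint_request = {
        "EndpointArn": endpoint_arn,
        "S3Settings": dict(S3_ENDPOINT_SETTINGS),
    }
    task_request = {
        "ReplicationTaskArn": task_arn,
        # Task settings are sent as a JSON string.
        "ReplicationTaskSettings": json.dumps(TASK_SETTINGS),
    }
    return endpoint_request, task_request


# With boto3 installed and credentials configured, the requests could be
# sent like this (ENDPOINT_ARN and TASK_ARN are placeholders):
#   import boto3
#   dms = boto3.client("dms")
#   endpoint_request, task_request = build_requests(ENDPOINT_ARN, TASK_ARN)
#   dms.modify_endpoint(**endpoint_request)
#   dms.modify_replication_task(**task_request)
```

Keeping the payload construction separate from the API calls makes it easy to review the exact values before they touch a shared endpoint.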

In this scenario, we optimized the target endpoint's write behavior by lowering the time and size thresholds that trigger a file transfer, using the cdcMaxBatchInterval and cdcMinFileSize settings, respectively. Any task using the same S3 endpoint now closes the current CDC file and creates a new one every 20 seconds if the file size hasn’t reached 16 MB (16,000 KB) first. These two parameters race against each other: the file write is triggered by whichever threshold is met first. Lowering these values can also increase the number of files generated on your S3 target.

The second configuration was done at the task level, which means each task must be changed individually if you want it to use the same values. To reduce the likelihood of “Reading from source is paused” issues, and considering the preceding endpoint thresholds, we can allow transactions to stay in memory for longer. The task now collects up to 3,000 changes or waits up to 5 seconds before considering whether it needs to send them to the replication instance storage.
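The interplay between the time and size thresholds can be illustrated with a simplified model. It assumes a steady change rate in KB per second, which real workloads rarely have. The function name and the throughput figures are hypothetical, and actual AWS DMS behavior may differ.

```python
def first_trigger(throughput_kb_per_s, max_interval_s=20, min_file_size_kb=16000):
    """Return (trigger, seconds) for the threshold a steady stream hits first.

    "size" means the file reaches min_file_size_kb before the interval ends;
    "interval" means the timer expires first.
    """
    if throughput_kb_per_s <= 0:
        return ("interval", max_interval_s)
    seconds_to_fill = min_file_size_kb / throughput_kb_per_s
    if seconds_to_fill < max_interval_s:
        return ("size", seconds_to_fill)
    return ("interval", max_interval_s)


# Hypothetical throughputs: a quiet period and a peak period.
print(first_trigger(400))   # ('interval', 20)
print(first_trigger(2000))  # ('size', 8.0)
```

At 400 KB/s the file would need 40 seconds to reach 16,000 KB, so the 20-second interval fires first. At 2,000 KB/s the size threshold is reached after 8 seconds.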

Results and additional configurations

After we applied the preceding changes, the replication streams from both sources went down to an average target latency of 3–10 seconds, as the following screenshot confirms (a reduction of about 99% from the 15-minute window previously defined). Processing changes faster also reduced the total memory used, which in turn reduced the occurrences of CDC capture being paused.

If the message “Reading from source is paused. Total storage used by swap files exceeded the limit” still occurs, consider increasing the overall memory settings for the affected task. This keeps transactions in memory for longer, according to the following settings:
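The reduction figure can be checked with a few lines of arithmetic, comparing the observed 3–10 seconds against the 15-minute (900-second) window. The function name is chosen for this example only.

```python
def reduction_pct(observed_s, window_s=15 * 60):
    """Percentage by which observed latency falls below the accepted window."""
    return (window_s - observed_s) / window_s * 100


for observed_s in (3, 10):
    print(f"{observed_s} s is {reduction_pct(observed_s):.1f}% below the window")
```

Both ends of the observed range land at roughly 99%.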

MemoryLimitTotal – Sets the maximum size (in MB) that all transactions can occupy in memory before being written to disk. The default value is 1,024. Increasing this parameter to a higher value will help use an instance’s available memory effectively.

MemoryKeepTime – Sets the maximum time in seconds that each transaction can stay in memory before being written to disk. The duration is calculated from the time that AWS DMS started capturing the transaction. The default value is 60 seconds. Increasing this value will help keep transactions in memory for longer.

These changes also increase the total memory allocation for each individual task, so choosing the right instance class is critical. Because memory is one of the most critical resources on a replication instance, memory-optimized instances (R class) tend to be the preferred choice for critical, migration-intensive production environments, but the ideal type and size can vary based on several other factors. Also, if either limit is exceeded, the task eventually has to write to disk, which can lead to latency issues of its own.
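As a rough sketch, the memory settings can be built as a task settings fragment and checked against the instance memory. All values below are hypothetical, including the 25% reserve, the per-task limit, and the 16,384 MB instance size. The function names are invented for this example, and the check is a simplification that ignores everything else the instance needs memory for.

```python
import json


def memory_tuning_settings(memory_limit_total_mb, memory_keep_time_s):
    """Return a task settings fragment as a JSON string."""
    return json.dumps(
        {
            "ChangeProcessingTuning": {
                "MemoryLimitTotal": memory_limit_total_mb,  # MB, default 1024
                "MemoryKeepTime": memory_keep_time_s,  # seconds, default 60
            }
        },
        indent=2,
    )


def fits_instance(task_limits_mb, instance_memory_mb, reserve_fraction=0.25):
    """True if the summed per-task limits leave reserve_fraction of memory free."""
    usable_mb = instance_memory_mb * (1 - reserve_fraction)
    return sum(task_limits_mb) <= usable_mb


# Hypothetical example: three tasks at 2,048 MB each on a 16,384 MB instance.
print(memory_tuning_settings(2048, 120))
print(fits_instance([2048, 2048, 2048], 16384))
```

Running a check like this before raising MemoryLimitTotal on several tasks helps avoid pushing a shared replication instance into swap.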

Conclusion

In this post, we demonstrated how to optimize your AWS DMS tasks for large data change volumes when using Amazon S3 as a target. Try out this solution for your own use case, and review the best practices to take advantage of other AWS DMS features, including general logging, context logging, observability through CloudWatch metrics, and automation and notification through Amazon EventBridge and Amazon SNS. If none of the settings discussed here solve your issues, you can also check the AWS DMS documentation for more options, or reach out to AWS Support so that our database SMEs can guide you.

About the Author

Felipe Gregolewitsch is a Senior DB Migration Specialist SA with Amazon Web Services and SME for RDS core services. With more than 14 years of experience in database migrations covering projects in South America and Asia/Pacific, Felipe helps customers on their migration and replication journeys across multiple engines and platforms, such as relational databases, data lakes, and data warehouses, using options that range from native tools to AWS DMS.