Google AI researchers have shown that a joint model combining sound separation and automatic speech recognition (ASR) benefits from hybrid datasets that pair large amounts of simulated audio with small amounts of real recordings. The approach yields accurate speech recognition on augmented reality (AR) glasses, particularly in noisy and reverberant environments. This is an important step toward better communication experiences, especially for people with hearing impairments or those conversing in a non-native language. Traditional methods struggle to separate the target speech from background noise and competing speakers, which motivates new approaches to speech recognition on AR glasses.

Traditional methods rely on impulse responses (IRs) recorded in actual environments, which are time-consuming and difficult to collect at scale. Simulated data, in contrast, allows large amounts of diverse acoustic data to be generated quickly and cheaply. Google AI’s researchers propose using a room simulator to build simulated training data for sound separation models, complementing real-world data collected with AR glasses. By combining a small amount of real-world data with simulated data, the method aims to capture the acoustic properties unique to the AR glasses while improving model performance.
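
To make the idea of cheaply generated IRs concrete, here is a minimal Python sketch. It is not the room simulator described by the researchers: it assumes a much simpler stand-in model in which an IR is white noise shaped by an exponential decay set by a reverberation time (RT60). The function name `synthetic_ir`, the 16 kHz sample rate, and the RT60 range are all illustrative assumptions.

```python
import numpy as np

def synthetic_ir(rt60_s, sample_rate=16000, rng=None):
    """Toy impulse response: decaying white noise (NOT the paper's simulator)."""
    rng = rng or np.random.default_rng()
    n = int(rt60_s * sample_rate)
    t = np.arange(n) / sample_rate
    # Amplitude envelope that has dropped by 60 dB at t = rt60_s.
    decay = np.exp(-3.0 * np.log(10.0) * t / rt60_s)
    ir = rng.standard_normal(n) * decay
    ir[0] = 1.0  # crude direct-path component
    return ir / np.max(np.abs(ir))

# Draw a handful of IRs with different (assumed) reverberation times.
rng = np.random.default_rng(0)
irs = [synthetic_ir(rng.uniform(0.2, 1.0), rng=rng) for _ in range(4)]
```

Generating thousands of such responses takes seconds, which is the practical advantage over recording IRs room by room. A realistic simulator would additionally model room geometry, frequency-dependent reflections, and microphone directivity.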

The proposed method involves several key steps. First, real-world IRs are collected with AR glasses in different environments, capturing the acoustic properties specific to the device. Then, a room simulator is extended to generate simulated IRs with frequency-dependent reflections and microphone directivity, making the simulated data more realistic. Finally, the researchers build a data generation pipeline that synthesizes training datasets by mixing reverberant speech and noise sources with controlled distributions.

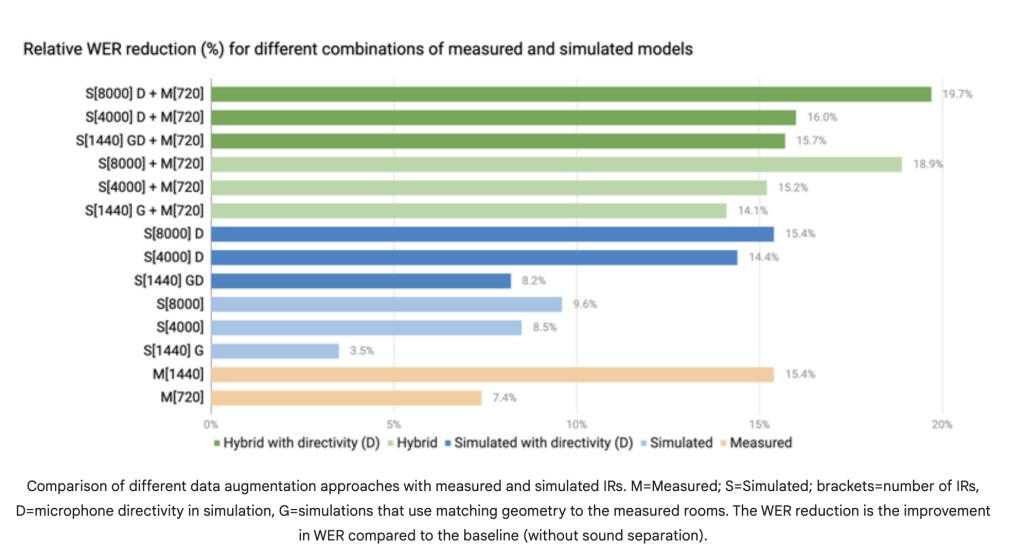
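
The mixing step of such a pipeline can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: it handles a single channel, uses one speech source and one noise source, and draws the signal-to-noise ratio (SNR) uniformly from a hypothetical range. The helper names `reverberate`, `mix_at_snr`, and `make_example` are invented for this example.

```python
import numpy as np

def reverberate(dry, ir):
    """Convolve a dry signal with an IR, truncated to the dry signal's length."""
    return np.convolve(dry, ir)[: len(dry)]

def mix_at_snr(speech, noise, snr_db):
    """Scale the noise so the speech-to-noise power ratio is snr_db, then add."""
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(noise ** 2)
    target_noise_power = speech_power / (10.0 ** (snr_db / 10.0))
    gain = np.sqrt(target_noise_power / noise_power)
    return speech + gain * noise

def make_example(speech, noise, speech_ir, noise_ir, rng, snr_range_db=(-5.0, 20.0)):
    """Build one (mixture, target) training pair; the SNR range is an assumption."""
    snr_db = rng.uniform(*snr_range_db)
    # Assumes the noise clip is at least as long as the speech clip.
    noise = noise[: len(speech)]
    rev_speech = reverberate(speech, speech_ir)
    rev_noise = reverberate(noise, noise_ir)
    mixture = mix_at_snr(rev_speech, rev_noise, snr_db)
    return mixture, rev_speech, snr_db
```

A separation model would be trained to recover `rev_speech` from `mixture`. Controlling the distribution of `snr_db`, and of the IRs passed in, is what lets the dataset cover both easy and difficult acoustic conditions.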

Experimental results show a significant improvement in speech recognition performance with the hybrid dataset, which contains both real-world and simulated IRs. Models trained on the hybrid dataset outperform models trained on real-world data alone or on simulated data alone, supporting the effectiveness of the proposed method. Modeling microphone directivity in the simulation improves training further and reduces the reliance on real-world data.
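
One simple way to assemble a hybrid dataset is to draw each training example's IR from either the real pool or the simulated pool with a fixed probability. The Python sketch below assumes this scheme for illustration only; the article does not state how the researchers balance the two sources, and both the `sample_ir` helper and the `real_fraction` parameter are hypothetical.

```python
import random

def sample_ir(real_irs, simulated_irs, real_fraction, rng):
    """Pick one IR, drawing from the real pool with probability real_fraction."""
    if real_irs and rng.random() < real_fraction:
        return "real", rng.choice(real_irs)
    return "simulated", rng.choice(simulated_irs)

# Placeholder identifiers stand in for actual IR arrays.
real_irs = [f"real_{i}" for i in range(5)]          # small recorded set
simulated_irs = [f"sim_{i}" for i in range(1000)]   # large simulated set

rng = random.Random(0)
batch = [sample_ir(real_irs, simulated_irs, real_fraction=0.1, rng=rng) for _ in range(8)]
```

Setting `real_fraction` to 0 or 1 reproduces the simulated-only and real-only baselines, which makes this kind of sampler convenient for the comparison described above.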

In conclusion, the paper presents a novel approach to speech recognition on AR glasses in noisy and reverberant environments. By using a room simulator to generate training data, the proposed method offers a cost-effective way to improve model performance. The hybrid dataset, consisting of both real-world and simulated IRs, captures device-specific acoustic properties while reducing the need for extensive real-world data collection. Overall, the study shows that simulation-based methods can be useful for building speech recognition systems for wearable devices.

Check out the Paper and Google Blog. All credit for this research goes to the researchers of this project.

The post Improving Speech Recognition on Augmented Reality Glasses with Hybrid Datasets Using Deep Learning: A Simulation-Based Approach appeared first on MarkTechPost.
