The fields of Artificial Intelligence (AI) and Deep Learning have grown rapidly in recent years. Within deep learning, the Transformer architecture has become dominant, delivering exceptional performance on a wide range of downstream tasks and serving as the backbone of large pre-trained models.

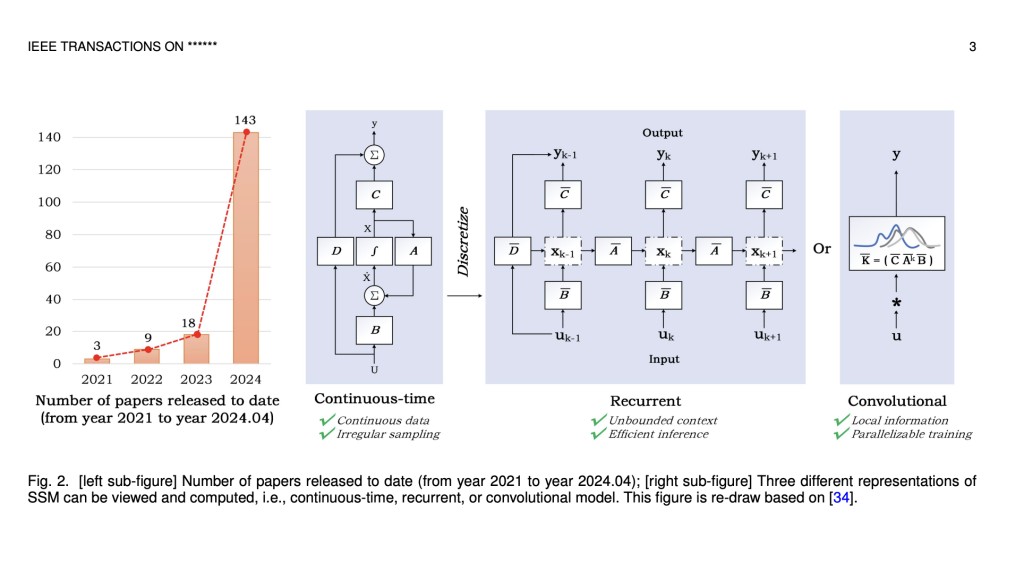

However, for many researchers and practitioners, the heavy computational demands of Transformers have proven to be a major obstacle, since the cost of self-attention grows quadratically with sequence length. As a result, much effort has gone into developing more efficient alternatives to attention. Among these, the State Space Model (SSM) has drawn the most interest as a possible substitute for the Transformer’s self-attention mechanism.
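To make the efficiency argument concrete, here is a minimal NumPy sketch of a single-input, single-output SSM. It is our own illustration rather than code from the survey: it assumes a diagonal state matrix with zero-order-hold discretization, and the function names and parameter values are hypothetical. The recurrent form processes a length-L sequence in O(L·N) time for state size N, whereas self-attention compares every pair of tokens at O(L²) cost. The same model can equivalently be run as a convolution, a duality popularized by S4.

```python
import numpy as np


def discretize_zoh(a, b, delta):
    """Zero-order-hold discretization of a diagonal continuous-time SSM (assumed setup).

    a: (N,) diagonal entries of A (negative, so the system is stable)
    b: (N,) input vector B
    delta: scalar step size
    """
    a_bar = np.exp(delta * a)          # A_bar = exp(delta * A)
    b_bar = (a_bar - 1.0) / a * b      # B_bar = A^-1 (exp(delta * A) - I) B, elementwise for diagonal A
    return a_bar, b_bar


def ssm_scan(x, a_bar, b_bar, c, d=0.0):
    """Recurrent mode: h_t = A_bar h_{t-1} + B_bar x_t, y_t = C h_t + D x_t.

    A single pass over the sequence, so the cost is O(L * N).
    """
    h = np.zeros_like(a_bar)
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = a_bar * h + b_bar * x_t    # diagonal A_bar: elementwise product
        y[t] = c @ h + d * x_t
    return y


def ssm_conv(x, a_bar, b_bar, c, d=0.0):
    """Convolutional mode: y = K * x with kernel K_k = C A_bar^k B_bar."""
    L = len(x)
    powers = a_bar[None, :] ** np.arange(L)[:, None]  # (L, N): A_bar^k for k = 0..L-1
    kernel = (powers * b_bar) @ c                      # (L,)
    return np.convolve(x, kernel)[:L] + d * x          # causal convolution, truncated to length L


# Usage with made-up parameters (hypothetical values, for illustration only)
rng = np.random.default_rng(0)
N, L = 4, 16
a = -np.arange(1, N + 1, dtype=float)
b = np.ones(N)
c = rng.standard_normal(N)
a_bar, b_bar = discretize_zoh(a, b, delta=0.1)
x = rng.standard_normal(L)

y_rec = ssm_scan(x, a_bar, b_bar, c)
y_conv = ssm_conv(x, a_bar, b_bar, c)
print(np.allclose(y_rec, y_conv))  # True: both modes compute the same output
```

The recurrent form suits step-by-step inference, while the convolutional form lets training process the whole sequence in parallel; in this sketch both produce the same outputs.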

A recent study by IEEE provides the first thorough analysis and comparison of these efforts, highlighting the benefits and characteristics of SSMs through experimental comparisons and analyses. The paper discusses the guiding principles of SSMs in detail and reviews current SSMs and their applications across a range of domains, including computer vision, graph analysis, multi-modal and multimedia tasks, point cloud and event stream processing, time series analysis, and Natural Language Processing (NLP), among others.

The paper also includes statistical comparisons and analyses of these SSM models, aimed at clarifying how effective different structural changes are for different tasks. According to the team, the goal is to help the AI community understand the subtleties of different designs, and which applications each suits best, by providing insight into the comparative performance of SSMs.

The team summarizes its primary contributions as follows:

- An overview of the state space concept and the core principles of SSMs.
- A discussion of the origins, adaptations, and uses of SSMs across a variety of fields, including computer vision, graph analysis, natural language processing, and more.
- Extensive experiments across several downstream tasks to evaluate the effectiveness of SSMs, including image-to-text generation, pixel-level segmentation, visual object tracking, person/vehicle re-identification, and single- and multi-label classification.
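For readers new to the state space concept, the standard formulation that SSM overviews typically start from is sketched below: a continuous-time linear system, its zero-order-hold discretization with step size $\Delta$, and the resulting recurrence over a sequence. This is the common textbook form, not a transcription from the paper, and the survey's own notation may differ.

$$
h'(t) = A\,h(t) + B\,x(t), \qquad y(t) = C\,h(t) + D\,x(t)
$$

$$
\bar{A} = \exp(\Delta A), \qquad \bar{B} = (\Delta A)^{-1}\bigl(\exp(\Delta A) - I\bigr)\,\Delta B
$$

$$
h_t = \bar{A}\,h_{t-1} + \bar{B}\,x_t, \qquad y_t = C\,h_t + D\,x_t
$$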

In conclusion, the study aims to provide a thorough review of SSMs along with insightful analysis, comparative viewpoints, and recommendations for future research. It suggests future directions to advance both the theoretical understanding and the real-world applications of SSMs, and it stresses the need for further research and innovation to realize the full potential of this area.


The post A Detailed AI Study on State Space Models: Their Benefits and Characteristics along with Experimental Comparisons appeared first on MarkTechPost.
