In deep learning, finding a unifying framework for designing neural network architectures has been a persistent challenge and a focal point of recent research. Earlier models have been described either by the constraints they must satisfy or by the sequence of operations they perform. Both descriptions are useful, but no cohesive framework has integrated the two perspectives.

The researchers tackle a core issue: the absence of a general-purpose framework that addresses both the specification of constraints and their implementation within neural network models. They highlight that current methods, including top-down approaches that focus on model constraints and bottom-up approaches that detail operational sequences, fail to provide a holistic view of neural network architecture design. This disjointed state of affairs limits developers’ ability to design efficient models tailored to the data structures they process.

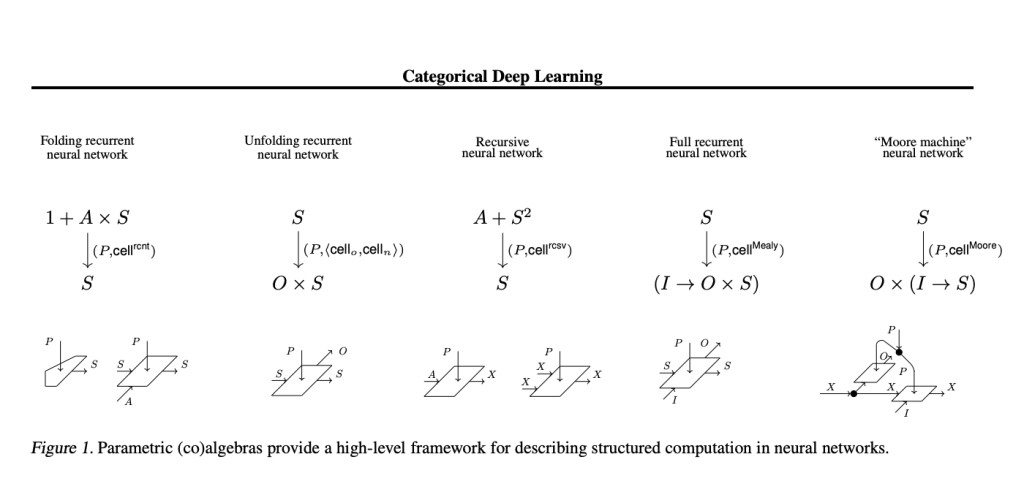

Researchers from Symbolica AI, the University of Edinburgh, Google DeepMind, and the University of Cambridge introduce a theoretical framework, grounded in category theory, that unites the specification of constraints with their implementations through monads valued in a 2-category of parametric maps. The aim is a more integrated and coherent methodology for neural network design. The approach covers a diverse landscape of neural network designs, including recurrent neural networks (RNNs), and offers a new lens for understanding and developing deep learning architectures. By applying category theory, the research recovers the constraints used in Geometric Deep Learning (GDL) and extends beyond them to a wider array of neural network architectures.
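To make the phrase "parametric maps" a little more concrete, here is a minimal Python sketch. It is not the paper's construction: it models only the basic idea that a parametric map is a function of a parameter and an input, and that composing two such maps pairs up their parameters. The monads and the 2-categorical structure are not modelled, and the names `Para`, `then`, `affine`, `relu`, and `unroll` are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Para:
    """A parametric map: a function of (params, input), bundled with its params."""
    params: Any
    apply: Callable[[Any, Any], Any]

    def __call__(self, x: Any) -> Any:
        return self.apply(self.params, x)

    def then(self, other: "Para") -> "Para":
        """Sequential composition; the composite's parameter is the pair of both."""
        f, g = self.apply, other.apply
        return Para(
            params=(self.params, other.params),
            apply=lambda pq, x: g(pq[1], f(pq[0], x)),
        )


def affine(w: float, b: float) -> Para:
    # Scalar affine map x -> w * x + b with parameters (w, b).
    return Para((w, b), lambda p, x: p[0] * x + p[1])


def relu() -> Para:
    # No learnable parameters: the parameter is the empty tuple.
    return Para((), lambda _p, x: max(0.0, x))


def unroll(cell: Para, state: Any, inputs: list) -> Any:
    """Apply the same parametric cell at every step (weight tying, as in an RNN)."""
    for x in inputs:
        state = cell((state, x))
    return state


# A small feed-forward composite: affine, then ReLU, then affine.
layer = affine(2.0, -1.0).then(relu()).then(affine(0.5, 3.0))
output = layer(4.0)

# A toy recurrent cell: new_state = a * state + b * x, with shared (a, b).
cell = Para((0.5, 1.0), lambda p, sx: p[0] * sx[0] + p[1] * sx[1])
final_state = unroll(cell, 0.0, [1.0, 2.0, 3.0])
```

In this sketch, `layer.params` is the nested tuple `(((2.0, -1.0), ()), (0.5, 3.0))`, which shows how composition accumulates parameters, while `unroll` reuses a single parameter at every time step.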

The proposed framework’s effectiveness is underscored by its ability to recover constraints utilized in GDL, demonstrating its potential as a general-purpose framework for deep learning. GDL, which uses a group-theoretic perspective to describe neural layers, has shown promise across various applications by preserving symmetries. However, it encounters limitations when faced with complex data structures. The category theory-based approach overcomes these limitations and provides a structured methodology for implementing diverse neural network architectures.
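The kind of symmetry constraint GDL works with can be illustrated with a small check of equivariance, the property that transforming the input and then applying a layer gives the same result as applying the layer and then transforming the output. The sketch below assumes a cyclic shift as the group action and a circular convolution as the layer; both are standard textbook choices and are not taken from the paper. The function names are hypothetical.

```python
from typing import Callable, List

Signal = List[int]


def shift(x: Signal, k: int) -> Signal:
    """Group action: rotate the signal k positions to the right (cyclically)."""
    k %= len(x)
    return x[-k:] + x[:-k] if k else list(x)


def circular_conv(x: Signal, kernel: Signal) -> Signal:
    """Circular convolution: y[i] = sum_j kernel[j] * x[(i - j) mod n]."""
    n = len(x)
    return [
        sum(kernel[j] * x[(i - j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]


def is_equivariant(layer: Callable[[Signal], Signal], x: Signal, k: int) -> bool:
    """Check layer(shift(x)) == shift(layer(x)) for one input and one shift."""
    return layer(shift(x, k)) == shift(layer(x), k)


def conv_layer(x: Signal) -> Signal:
    # Shares the same kernel at every position, so it commutes with shifts.
    return circular_conv(x, [1, -2, 3])


def position_scale(x: Signal) -> Signal:
    # Scales each entry by its index, so it depends on absolute position.
    return [i * v for i, v in enumerate(x)]


x = [1, 2, 3, 4, 5]
conv_ok = all(is_equivariant(conv_layer, x, k) for k in range(len(x)))
scale_ok = is_equivariant(position_scale, x, 1)
```

Here `conv_ok` is `True` and `scale_ok` is `False`: the convolution satisfies the shift constraint because its weights are shared across positions, while the position-dependent layer does not. A check on one input is only a spot test, not a proof of equivariance.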

At the centre of this research is the application of category theory to understanding and creating neural network architectures. This approach enables the creation of neural networks that are more closely aligned with the structures of the data they process, which the researchers expect to improve both the efficiency and the effectiveness of these models. The research highlights the universality and flexibility of category theory as a tool for neural network design, offering new insights into the integration of constraints and operations within neural network models.

In conclusion, this research introduces a groundbreaking framework based on category theory for designing neural network architectures. By bridging the gap between the specification of constraints and their implementations, the framework offers a comprehensive approach to neural network design. The application of category theory not only recovers and extends the constraints used in frameworks like GDL but also opens up new avenues for developing sophisticated neural network architectures.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Unifying Neural Network Design with Category Theory: A Comprehensive Framework for Deep Learning Architecture appeared first on MarkTechPost.
