A critical challenge in artificial intelligence, particularly for large language models (LLMs), is balancing model performance against practical constraints such as privacy, cost, and device compatibility. Large cloud-based models offer high accuracy, but their reliance on constant internet connectivity, their privacy risks, and their high costs limit where they can be used. Deploying these models on edge devices, meanwhile, makes it difficult to maintain low latency and high accuracy because of hardware limitations.

Existing work includes models such as Gemma-2B, Gemma-7B, and Llama-7B, as well as frameworks such as llama.cpp and MLC LLM, which aim to make AI more efficient and accessible. Projects such as NexusRaven, Toolformer, and ToolAlpaca have advanced function calling in language models, aiming for GPT-4-level effectiveness. Techniques such as LoRA have made fine-tuning feasible under GPU constraints. However, these efforts still struggle to balance model size against operational efficiency, particularly for low-latency, high-accuracy applications on resource-constrained devices.
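
LoRA keeps a pretrained weight matrix W frozen and trains only a low-rank update, computing y = Wx + (α/r)·B(Ax), where A is r × k and B is d × r. The pure-Python sketch below illustrates that standard formulation and why it is cheap to train; the 2048 × 2048 matrix size and rank 8 in the usage lines are illustrative assumptions, not the configuration used for Octopus v2.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """Compute y = W x + (alpha / r) * B (A x).

    W is the frozen pretrained weight (d x k); only A (r x k) and
    B (d x r) would be updated during LoRA fine-tuning.
    """
    r = len(A)                          # rank of the update = rows of A
    base = matvec(W, x)                 # frozen path
    update = matvec(B, matvec(A, x))    # low-rank path: project down, then up
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Return (full fine-tuning, LoRA) trainable-parameter counts for one d x k weight."""
    return d * k, r * (d + k)


# Illustrative sizes only (assumed, not taken from the paper).
full, lora = trainable_params(2048, 2048, 8)
print(full, lora, lora / full)
```

With these assumed sizes, LoRA trains 32,768 parameters for this matrix instead of 4,194,304, under 1% of the full count. Because B is conventionally initialized to zero, the adapted layer starts out identical to the pretrained one.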

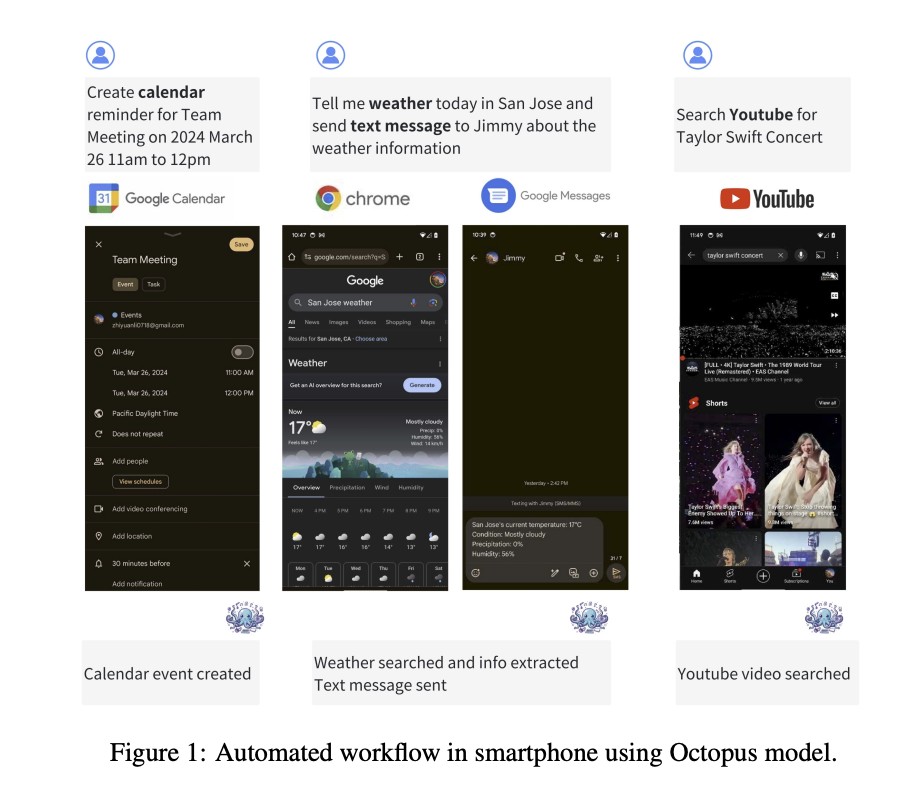

Researchers from Stanford University have introduced Octopus v2, an on-device language model designed to address the latency, accuracy, and privacy concerns of current LLM applications. Compared with previous approaches, Octopus v2 substantially reduces latency and improves accuracy for on-device applications. Its distinguishing feature is a fine-tuning method based on functional tokens, which enables precise function calling, surpasses GPT-4 in efficiency and speed, and cuts the required context length by 95%.
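
One intuition for the context-length savings: a pipeline that describes every available function in the prompt pays for those descriptions on every request, whereas a model fine-tuned with functional tokens can, in principle, receive only the user query. The sketch below compares the two prompt styles under loud assumptions: the function descriptions are hypothetical, and the whitespace token count is a crude stand-in for a real tokenizer, so the resulting percentage says nothing about the paper's 95% figure.

```python
from typing import List

# Hypothetical function descriptions that a description-in-context
# pipeline would paste into every prompt.
DESCRIPTIONS: List[str] = [
    "take_photo(camera: str): captures a photo using the front or back camera",
    "set_volume(level: int): sets the device media volume to an integer from 0 to 10",
    "send_text(contact: str, body: str): sends an SMS message to the named contact",
]


def count_tokens(text: str) -> int:
    """Crude whitespace count; real LLM tokenizers produce different numbers."""
    return len(text.split())


def prompt_with_descriptions(query: str, descriptions: List[str]) -> str:
    """Prompt that carries every function description alongside the query."""
    return "Available functions:\n" + "\n".join(descriptions) + "\nQuery: " + query


def prompt_with_functional_tokens(query: str) -> str:
    """Prompt for a model assumed to have learned its functions during fine-tuning."""
    return "Query: " + query


def reduction_pct(before: int, after: int) -> float:
    """Percentage of context removed when going from `before` to `after` tokens."""
    return 100.0 * (before - after) / before


query = "Take a selfie with the front camera"
before = count_tokens(prompt_with_descriptions(query, DESCRIPTIONS))
after = count_tokens(prompt_with_functional_tokens(query))
print(before, after, round(reduction_pct(before, after), 1))
```

The gap grows with the number of functions a description-based prompt must list, which is why removing descriptions from the context matters most for devices with many callable APIs.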

The methodology for Octopus v2 involved fine-tuning a 2-billion-parameter model derived from Google DeepMind’s Gemma-2B on a tailored dataset of Android API calls. The dataset was constructed with both positive and negative examples to improve function-calling precision. Training used both full-model fine-tuning and Low-Rank Adaptation (LoRA) to optimize performance for on-device execution. The key innovation was the introduction of functional tokens during fine-tuning, which substantially reduced latency and context-length requirements. This approach allowed Octopus v2 to achieve accurate, efficient function calling on edge devices without extensive computational resources.
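
A minimal sketch of the functional-token idea, assuming each callable function is assigned one dedicated vocabulary token so the model can name a function by emitting a single token followed by its arguments. The token strings (`<fn_0>`, `<fn_end>`), the device functions, and the negative-example target text are all hypothetical; the sketch shows how positive and negative training pairs might be formatted and how a completion in that format could be dispatched, not the authors' actual dataset or code.

```python
import ast
import re
from typing import Callable, Dict, List, Optional


# Hypothetical device functions standing in for Android API calls.
def take_photo(camera: str) -> str:
    return f"photo taken with {camera} camera"


def set_volume(level: int) -> str:
    return f"volume set to {level}"


FUNCTIONS: List[Callable[..., str]] = [take_photo, set_volume]

# Assumed token format: one new vocabulary entry per function, plus an end marker.
TOKEN_TO_FN: Dict[str, Callable[..., str]] = {f"<fn_{i}>": fn for i, fn in enumerate(FUNCTIONS)}
FN_TO_TOKEN: Dict[str, str] = {fn.__name__: tok for tok, fn in TOKEN_TO_FN.items()}
END_TOKEN = "<fn_end>"
NO_CALL = "No matching function."  # hypothetical target for negative examples


def make_example(query: str, fn_name: Optional[str] = None, args: str = "") -> Dict[str, str]:
    """Build one supervised pair; fn_name=None yields a negative (no-call) example."""
    if fn_name is None:
        return {"prompt": query, "completion": NO_CALL}
    return {"prompt": query, "completion": f"{FN_TO_TOKEN[fn_name]}({args}){END_TOKEN}"}


CALL_PATTERN = re.compile(r"^(<fn_\d+>)\((.*)\)<fn_end>$")


def dispatch(output: str) -> str:
    """Execute a completion in the functional-token format; return other text unchanged."""
    match = CALL_PATTERN.match(output.strip())
    if match is None:
        return output
    token, raw_args = match.groups()
    fn = TOKEN_TO_FN[token]  # raises KeyError for a token outside the registry
    # Parse Python-literal arguments only; the trailing comma forces a tuple.
    args = ast.literal_eval(f"({raw_args},)") if raw_args.strip() else ()
    return fn(*args)


dataset = [
    make_example("Take a selfie", "take_photo", '"front"'),
    make_example("Turn the volume up to 7", "set_volume", "7"),
    make_example("What is the capital of France?"),  # negative example
]

# Simulate a model that reproduces each training target exactly.
for ex in dataset:
    print(ex["completion"], "->", dispatch(ex["completion"]))
```

Because the function name collapses into a single token, the model does not need the function's name spelled out or its description supplied in the prompt at inference time, which is the intuition behind the latency and context savings described above.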

In benchmark tests, Octopus v2 achieved 99.524% accuracy on function-calling tasks, outperforming GPT-4. It also cut response time substantially: latency dropped to 0.38 seconds per call, a 35-fold improvement over previous approaches. In addition, it required 95% less context length, which makes it well suited to on-device operation. Together, these results show that Octopus v2 lowers operational demands while maintaining high performance, a significant advance in on-device language model technology.
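
The reported metrics reduce to simple ratios. The helpers below show the arithmetic; the correct/total counts are hypothetical, and since the article does not state which baseline or measurement setup the 35-fold figure refers to, the implied baseline latency is arithmetic only, assuming the improvement applies to the same per-call latency.

```python
def accuracy_pct(correct: int, total: int) -> float:
    """Function-calling accuracy as a percentage of evaluated queries."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * correct / total


def speedup(baseline_s: float, new_s: float) -> float:
    """How many times faster the new latency is than the baseline."""
    return baseline_s / new_s


def implied_baseline_s(new_s: float, fold: float) -> float:
    """Baseline latency implied by a new latency and an N-fold improvement."""
    return new_s * fold


# Assumes the 35-fold figure applies to the 0.38 s per-call latency.
baseline = implied_baseline_s(0.38, 35)
print(round(baseline, 2))
print(round(speedup(baseline, 0.38), 6))

# Hypothetical counts, not the paper's evaluation data.
print(accuracy_pct(199, 200))
```

Under that assumption, the comparison baseline would take roughly 13.3 seconds per call.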

In conclusion, the Stanford researchers’ work on Octopus v2 marks a significant step forward in on-device language modeling. With 99.524% function-calling accuracy and latency reduced to 0.38 seconds, Octopus v2 addresses key challenges in on-device AI performance, and its functional-token fine-tuning approach sharply reduces context length, improving operational efficiency. The research demonstrates both the model’s technical merits and its potential for broad real-world applications.

The post Researchers at Stanford University Introduce Octopus v2: Empowering On-Device Language Models for Super Agent Functionality appeared first on MarkTechPost.